The `wc` command (for example, with `ls *.csv` piped into `wc -l`) lets the developer count the number of CSV files. Create a new notebook or clone the template. First recommendation: when you use Jupyter, don't use df.
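Inside a notebook, the same counts can be taken from Python. The sketch below is a rough stand-in for `ls *.csv | wc -l` and `wc -l file.csv`; the function names `count_csv_files` and `count_lines` and the sample files are hypothetical, created only for the demo. One difference from the shell: `Path.glob("*.csv")` may also match hidden files, which `ls` skips by default.

```python
import tempfile
from pathlib import Path


def count_csv_files(folder):
    """Return how many .csv files sit directly inside `folder` (roughly `ls *.csv | wc -l`)."""
    return sum(1 for p in Path(folder).glob("*.csv") if p.is_file())


def count_lines(path):
    """Count newline bytes in a file, which is what `wc -l` reports."""
    with open(path, "rb") as f:
        # Read in 64 KiB chunks so large CSV files are not loaded into memory at once.
        return sum(chunk.count(b"\n") for chunk in iter(lambda: f.read(1 << 16), b""))


# Demo in a throwaway directory with hypothetical sample files.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "orders.csv").write_text("id,qty\n1,3\n2,5\n")
    (folder / "customers.csv").write_text("id\n")
    (folder / "notes.txt").write_text("not a csv\n")
    n_csv = count_csv_files(folder)
    n_lines = count_lines(folder / "orders.csv")

print(n_csv, n_lines)  # 2 3
```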

The normalize_orders notebook takes parameters as input. The default configuration imports from File, i… Notebooks help you create one place to write your queries, add documentation, and save your work as output in a reusable format.

UPDATE: On Databricks Runtime 6+, Python has been upgraded.
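The post does not show how normalize_orders reads its parameters. On Databricks, notebook parameters are commonly exposed as widgets and read with `dbutils.widgets.get(name)`. The sketch below assumes that approach and falls back to a default when `dbutils` is not defined (for example, in a local Jupyter session). The helper `get_param` and the parameter names `input_path` and `run_date` are hypothetical.

```python
def get_param(name, default):
    """Read a notebook parameter, falling back to `default`.

    On Databricks, `dbutils.widgets.get(name)` returns the widget value as a string.
    Outside Databricks, `dbutils` is undefined and raises NameError; a missing widget
    on Databricks also raises, so any exception means "use the default".
    """
    try:
        return dbutils.widgets.get(name)  # noqa: F821  (only defined on Databricks)
    except Exception:
        return default


# Hypothetical parameters for a notebook such as normalize_orders.
input_path = get_param("input_path", "/tmp/orders.csv")
run_date = get_param("run_date", "2020-01-01")
print(input_path, run_date)
```

On Databricks, a widget can be declared with `dbutils.widgets.text("input_path", "/tmp/orders.csv")`, and a calling notebook can supply values through the arguments map of `dbutils.notebook.run(path, timeout_seconds, arguments)`. The path and values shown here are illustrative only.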

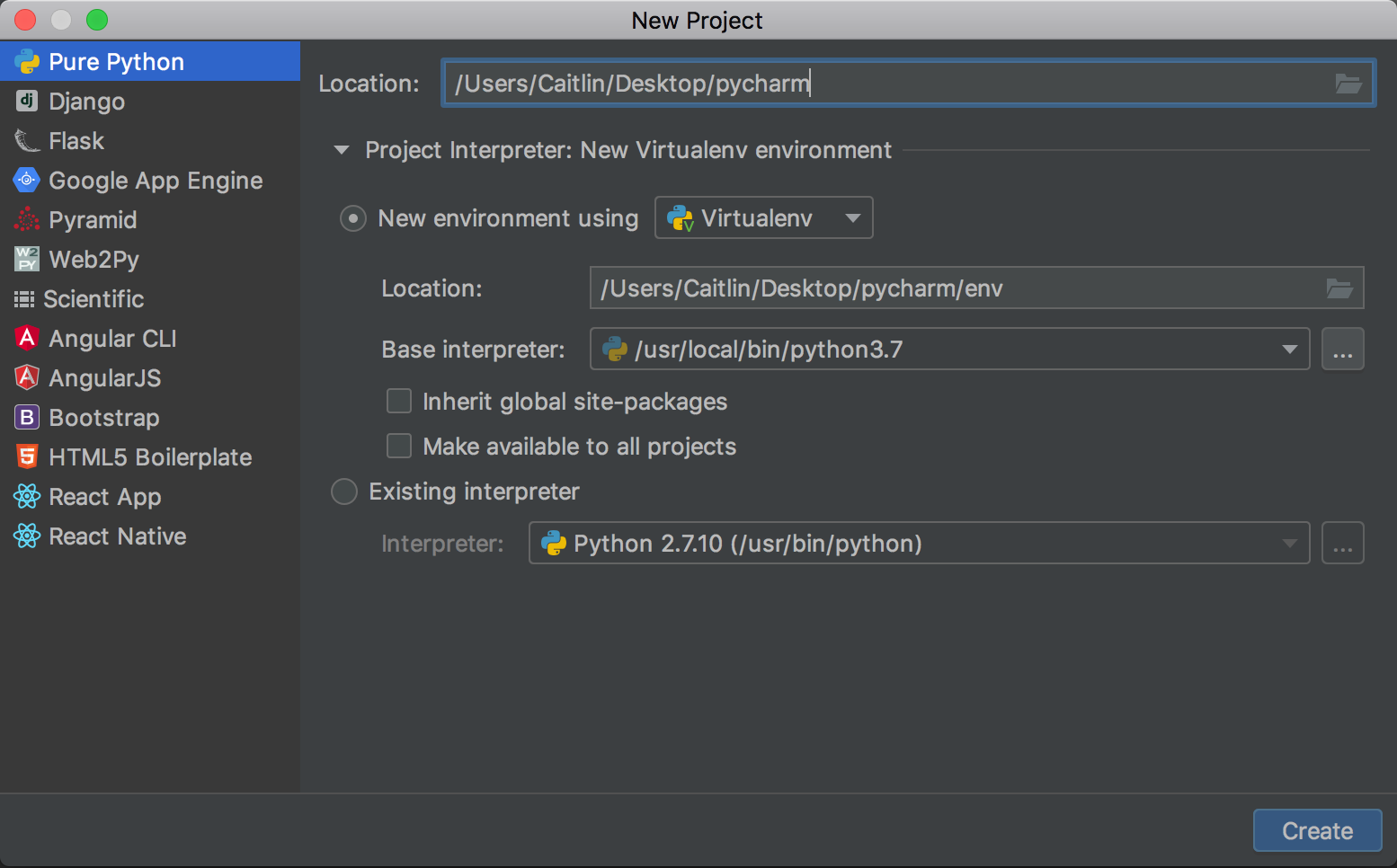

# Jupyter Notebook PyCharm update

On Databricks Runtime …2 for Genomics and below, when you update the notebook environment using `%conda`, the new environment is not activated on worker Python processes. (See also Endjin's article "Using Databricks Notebooks to Run an ETL Process".) A notebook is essentially a source artifact, saved as an … PixieDust speeds up data manipulation and display with features such as auto-visualization of Spark DataFrames and real-time Spark … Uses include data cleaning and transformation, numerical simulation, statistical modeling, data visualization, machine learning, and much more.
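To make "a notebook is essentially a source artifact" concrete: a Jupyter `.ipynb` file is a JSON document whose `cells` list holds code and markdown cells. The sketch below writes a simplified, hand-built notebook in that shape (it omits fields such as per-cell `id`s that newer nbformat versions add) and counts its cells by type. `summarize_notebook` and the sample cells are hypothetical.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path


def summarize_notebook(path):
    """Count the cells in a .ipynb file by their cell_type."""
    with open(path, encoding="utf-8") as f:
        nb = json.load(f)
    return Counter(cell["cell_type"] for cell in nb.get("cells", []))


# A simplified nbformat-4 style notebook, built by hand for illustration.
example_nb = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Orders report"]},
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["rows = [1, 2, 3]"]},
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["print(sum(rows))"]},
    ],
}

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "example.ipynb"
    path.write_text(json.dumps(example_nb), encoding="utf-8")
    counts = summarize_notebook(path)

print(counts)
```

Because the file is plain JSON, it can be diffed, versioned, and inspected like any other source file, which is what makes notebooks usable as build artifacts.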

# Jupyter Notebook PyCharm how-to

If I want to see how the cell will look after running it, I press Ctrl+Enter, which runs the current cell. To start Jupyter Notebook, `cd` into the notebook folder and run `jupyter notebook` in the terminal. Once you are on the Jupyter Notebook web interface, you'll see the names …

In the Databricks portal, first select the Workspace menu.

A notebook is a web-based interface to a document that contains runnable code, visualizations, and narrative text. According to the Jupyter Project, the notebook extends the console-based approach to interactive computing in a qualitatively new direction, providing a web-based application suitable for capturing the whole computation process: developing, documenting, and executing code, as well as communicating the results. Jupyter Notebook allows you to create and share documents that contain live code, equations, visualizations, and explanatory text.

Databricks supports multiple languages, including Scala, R, and SQL. See this document for an introduction to loading a notebook from Databricks into your account to explore the types of charts you can create in your notebook. Example projects include querying telemetry data from Application Insights and charting MLB GameDay PitchF/x data using Jupyter Notebooks, Python, and Matplotlib.

As part of the same project, we also ported part of an existing ETL Jupyter notebook, written with the Python pandas library, into a Databricks notebook.
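The post does not show the ported notebook. One way to make an ETL notebook easier to move between Jupyter and Databricks is to keep each stage in a small function, so only the read and write calls change (for example, `pd.read_csv` locally versus `spark.read.csv` on Databricks). The sketch below assumes that structure and uses the standard-library `csv` module in place of pandas so it runs anywhere; the column names `order_id` and `qty` and the sample data are hypothetical.

```python
import csv
import io


def extract(text):
    """Parse CSV text into a list of dicts (stand-in for pd.read_csv)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Clean rows: trim IDs, drop rows with a missing quantity, cast qty to int."""
    cleaned = []
    for row in rows:
        qty = (row.get("qty") or "").strip()
        if not qty:
            continue  # skip incomplete records
        cleaned.append({"order_id": row["order_id"].strip(), "qty": int(qty)})
    return cleaned


def load(rows):
    """Serialize rows back to CSV text (stand-in for writing a file or table)."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["order_id", "qty"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


raw = "order_id,qty\n A1 ,3\nA2,\nA3,5\n"
result = transform(extract(raw))
print(result)  # [{'order_id': 'A1', 'qty': 3}, {'order_id': 'A3', 'qty': 5}]
print(load(result))
```

Keeping `transform` free of I/O means it can be tested in a local notebook and then reused unchanged once the extract and load steps are swapped for their Databricks equivalents.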
